Artificial intelligence != Biological intelligence

Humans don't drive using lidar, but AI does. In fact, autonomous driving systems like Waymo use a combination of sensors, including 4 lidar units, 13 cameras and 6 radar units, to drive safely on the road (arguably more safely than humans). You wouldn't ask a human to review real-time data from 4 lidar, 13 cameras and 6 radar systems to steer a car, just as you wouldn't ask a computer to drive using a single pair of eyeballs that swivel around. Same task, different sensors. Artificial intelligence is different to biological intelligence and should be leveraged accordingly. Neural networks can discover and process patterns from superhuman amounts of data. As a general rule, we should beware of asking AI to make meaning from anthropocentric sensors when viable AI-centric sensors exist.

We are unwittingly handicapping AI success with anthropocentric sensors

The concrete example I'll focus on, where human-centric sensors handicap AI development, is the electrocardiogram (ECG), which I believe could be much improved with an AI-focused redesign.

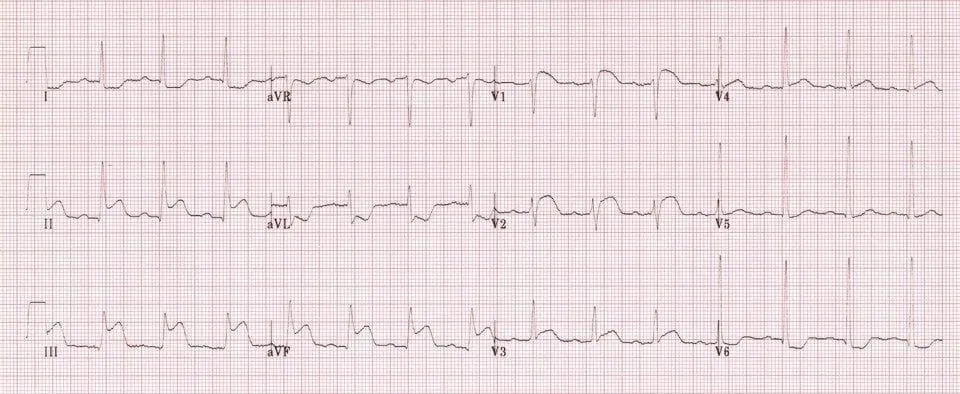

Standard 12-lead ECG pictured above.

An ECG is a medical investigation which uses the electrical activity generated by the heart to assess function and pathology. It serves many purposes, from identifying acute infarction and arrhythmias, to diagnosing structural defects, to monitoring the cardiac effects of drug toxicity. It is a cornerstone of advanced life support algorithms and arguably a fundamental marker of modern medicine. Fortunately, ECGs are widely available and cheap to administer, so we have enormous amounts of physiological and pathological data available[1]. On paper it should be perfect for AI, but I believe its potential has yet to be realised. The models which exist are limited and lack external validation.

For a sense of the awesome[2] experience of learning to read ECGs: it consists of memorising and applying rules like these:

- QRS duration > 120ms

- RSR' pattern in V1-3 ("M-shaped" QRS complex)

- Wide, slurred S wave in lateral leads (I, aVL, V5-6)
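To make the "memorise and apply" nature of this concrete, here is a minimal sketch of the three rules above as a hand-written check (together they describe the textbook pattern for right bundle branch block). The class and function names are hypothetical, and the two boolean fields stand in for pattern judgements that a human reader, or some upstream detector, would have to make first.

```python
from dataclasses import dataclass


@dataclass
class QrsFindings:
    """Findings a reader extracts from the trace (hypothetical structure)."""
    qrs_duration_ms: float                  # measured QRS width
    rsr_prime_in_v1_to_v3: bool             # "M-shaped" QRS seen in V1-3
    wide_slurred_s_in_lateral_leads: bool   # seen in I, aVL, V5-6


def matches_rule_set(findings: QrsFindings) -> bool:
    """Return True only when all three listed criteria are met."""
    return (
        findings.qrs_duration_ms > 120
        and findings.rsr_prime_in_v1_to_v3
        and findings.wide_slurred_s_in_lateral_leads
    )


example = QrsFindings(qrs_duration_ms=140,
                      rsr_prime_in_v1_to_v3=True,
                      wide_slurred_s_in_lateral_leads=True)
print(matches_rule_set(example))
```

Note that the hard part is not the final `and`: it is producing the inputs, which is exactly the pattern recognition that takes humans years to learn.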

I don't doubt that folks who are very good at reading ECGs can interpret them confidently and accurately, but it takes a lot of experience (and poorly read ECGs) before an individual gets to that point. Even then, 12-lead ECG interpretation is far from perfect. Even specialist cardiologists show interobserver variation - not to speak of non-cardiologist physicians or junior doctors.

Human-centric sensors are often awkward for AI

Many don't know that the standard 12-lead ECG is quite an opinionated investigation and far from ideal for AI to work with. It is beyond the scope of this post to cover at length, but a modern ECG is far from the raw voltage data measured at its electrodes. Specifically, the time-series data which ends up in physiology datasets has been highly processed at both the analog and digital stages[3] to aid human eyes and brains in understanding the trace. We are asking computers to discover patterns from already highly opinionated, hand-crafted features. It's as if we asked self-driving car companies to train only on video from human drivers with GoPros strapped to their foreheads[4]. If we fed models less processed data, they would have a better chance of integrating it and creating native patterns for our human needs[5].
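As one small illustration of that processing, the 10-electrode to 12-lead step mentioned in footnote [3] can be sketched with the conventional textbook lead definitions. This is a simplified sketch for a single time sample: the function name and the input dictionary layout are assumptions for illustration, the potentials are taken to be measured against a common reference (the tenth, right-leg electrode), and real devices also apply filtering that is not shown here.

```python
PRECORDIAL = ("V1", "V2", "V3", "V4", "V5", "V6")


def derive_12_leads(electrodes):
    """Combine electrode potentials (mV, one sample) into the 12 standard leads.

    `electrodes` maps "RA", "LA", "LL" and "V1".."V6" to potentials measured
    against a common reference (hypothetical input layout).
    """
    ra, la, ll = electrodes["RA"], electrodes["LA"], electrodes["LL"]
    # Wilson central terminal: the average of the three limb electrodes.
    wct = (ra + la + ll) / 3.0
    leads = {
        # Bipolar limb leads (Einthoven)
        "I": la - ra,
        "II": ll - ra,
        "III": ll - la,
        # Augmented limb leads (Goldberger)
        "aVR": ra - (la + ll) / 2.0,
        "aVL": la - (ra + ll) / 2.0,
        "aVF": ll - (ra + la) / 2.0,
    }
    # Precordial leads: each chest electrode minus the central terminal.
    for name in PRECORDIAL:
        leads[name] = electrodes[name] - wct
    return leads


# Made-up sample values, purely to exercise the function.
sample = {"RA": -0.2, "LA": 0.3, "LL": 0.7,
          "V1": 0.1, "V2": 0.4, "V3": 0.9, "V4": 1.2, "V5": 1.0, "V6": 0.6}
leads = derive_12_leads(sample)
print(sorted(leads))
```

Under these definitions, III, aVR, aVL and aVF are all linear combinations of I and II, so the 12 displayed leads carry only 8 independent channels. The extra views exist for the human reader, not because they add information.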

AI-centric sensors give AI more agency to find patterns

Furthermore, there's no reason an ECG designed for AI to interpret couldn't have 24 leads, or 100 leads, or 1000 leads. Yes, there may well be diminishing returns, but you may also unlock new predictive power in the same way AlphaZero uncovered new chess strategies on its own[6]. This idea has been tried to good effect in the past: I found studies which used an 80-lead ECG and reported better detection of heart attacks compared to the 12-lead. They pre-date the explosion of AI and the transformer architecture in recent years, and I would certainly be curious to see whether those authors are working on something like this now.

The takeaway: in a domain where AI is struggling, consider whether its data inputs have been handicapped by human-centric sensors and, if so, redesign accordingly.

Steelmanning

Interpretability

AI-centric sensors are black boxes and not human-interpretable in the way the standard 12-lead ECG is, which means their findings will be less actionable even if they are more accurate.

This is a good argument against. By interpretability, I mean the degree to which humans can understand the reasoning and decision-making processes of AI systems. If the output of a new 100-lead ECG cannot be explained, it may not be actionable and can only be of benefit in a medical paradigm more tolerant of black-box algorithms[7]. Perhaps there's a role for mechanistic interpretability? Or will we need to accept a less explainable but more accurate medical paradigm? The biggest reassurance I have, however, is that the 12-lead ECG didn't start off interpretable either: we had to build up observations and explanations over time. With a neural network, I feel like you could at least inspect every neuron.

Human deskilling

AI-centric sensors will result in human deskilling, leading us to a point where we lose agency in our medical decisions.

I think physician deskilling is a real problem as reliance on AI systems grows. Doctors may be less able to recognise when something is awry (e.g. a procedural error resulting in data artifacts) and may blindly follow the computer if the output itself is not interpretable. The other consideration, as digitisation and computer reliance grow, is the danger that adversarial attacks could exploit a more automated system. One mitigation is to keep the standard 12-lead ECG within this new ECG system, so humans can 'sense check' the bigger model's outputs.

[1] https://www.physionet.org/about/database/ Ctrl/Cmd + F "ECG"

[2] /s

[3] Many clinicians don't think about this but a good place to start is to ask yourself why a 12-lead ECG only has 10 electrodes. (answer: we do synthetic lead derivation)

[4] A currently absurd but interesting idea nonetheless.

[5] Part of a broader trend of scaling data instead of hand-crafting features, e.g. the success of AlphaFold in predicting protein structure came from data and attention networks finding their own method in the madness.

[6] https://www.idi.ntnu.no/emner/it3105/materials/neural/silver-2017b.pdf Remember how older chess engines were trained on chess experts' moves? Well, the leap in advancement came when the models played against themselves and found new strategies which humans had not previously considered.

[7] This would excel in a system where a person's health state is represented primarily as weights and biases, in the same way that there exists a representation of me as a vector (several, I'm sure) which data brokers or big tech use to serve me advertisements they reckon I'll like.